Linear Algebra

For part (a) I found that

λ₁ = 2

λ₂ = 2.5 + √(a + 1.25) and

λ₃ = 2.5 - √(a + 1.25)

For part (b), do I need to substitute each λ into A - λI and compute null(A - λI) to find the eigenvectors? If I do this, the row reduction will be very complicated.

Is there a simpler way of looking at this question?

The question doesn't ask you to find the eigenvectors. It just asks for which values of a there is an orthogonal basis of ℝ³ made up of eigenvectors of A.

I believe there is a theorem stating that if the eigenvalues are distinct, then the corresponding eigenvectors are linearly independent. Based on that, I would choose all a that give three distinct eigenvalues.

But there might be a weaker requirement you can use! My theorem may be too demanding, so you could miss some valid values of a.

For example, when a = -1.25 two of the λ values are equal, so maybe check this special case and see whether you get three linearly independent eigenvectors anyway. If you can, then together with the theorem I claimed above for a > -1.25, every a ≥ -1.25 would give three linearly independent eigenvectors, which is an orthogonal basis for ℝ³. Then some argument about the complex eigenvalues (a < -1.25) is needed.
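Working only from the eigenvalues found in part (a), the case split on a can be written out as below. This is a sketch that uses nothing about A beyond those three eigenvalues. It also flags a = -1, where λ₃ coincides with λ₁ = 2, so that value would need the same special-case check as a = -1.25.

```latex
\lambda_{2,3} = 2.5 \pm \sqrt{a + 1.25}
\quad\text{are the roots of}\quad
\lambda^2 - 5\lambda + (5 - a) = 0

\begin{cases}
a < -1.25: & \lambda_{2,3} \text{ non-real (complex conjugate pair)} \\
a = -1.25: & \lambda_2 = \lambda_3 = 2.5 \\
a = -1: & \lambda_3 = 2.5 - \sqrt{0.25} = 2 = \lambda_1 \\
a > -1.25,\ a \neq -1: & \lambda_1, \lambda_2, \lambda_3 \text{ real and distinct}
\end{cases}
```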

So, if a matrix has 3 linearly independent eigenvectors, these vectors automatically form an orthogonal basis for ℝ³?

I checked λ₂ = λ₃ = 2.5 and it only gives me 1 eigenvector. So a = -1.25 only gives me 2 linearly independent eigenvectors.
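The check described here (counting independent eigenvectors for a repeated eigenvalue) amounts to computing dim null(A - λI) = 3 - rank(A - λI). A minimal pure-Python sketch, assuming a hypothetical matrix N rather than the exercise's A (which isn't reproduced in the thread): N has λ = 3 as a double root but only a one-dimensional eigenspace for it, the same situation as λ₂ = λ₃ = 2.5 at a = -1.25. The helpers `rank` and `geometric_multiplicity` are illustrative names, not library functions.

```python
from fractions import Fraction


def rank(A):
    """Rank of a matrix via Gaussian elimination over the rationals (exact)."""
    rows = [[Fraction(x) for x in row] for row in A]
    r = 0  # number of pivots found so far
    for c in range(len(rows[0])):
        if r == len(rows):
            break
        pivot = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot is None:
            continue  # no pivot in this column
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(len(rows)):
            if i != r and rows[i][c] != 0:
                f = rows[i][c] / rows[r][c]
                rows[i] = [x - f * y for x, y in zip(rows[i], rows[r])]
        r += 1
    return r


def geometric_multiplicity(A, lam):
    """dim null(A - lam*I): how many independent eigenvectors lam contributes."""
    n = len(A)
    shifted = [[A[i][j] - (lam if i == j else 0) for j in range(n)] for i in range(n)]
    return n - rank(shifted)


# Hypothetical matrix (not the exercise's A): eigenvalues 2, 3, 3.
N = [[2, 0, 0],
     [0, 3, 1],
     [0, 0, 3]]

print(geometric_multiplicity(N, 2))  # 1
print(geometric_multiplicity(N, 3))  # 1, even though 3 is a double root
```

So N, like A at a = -1.25, has only 1 + 1 = 2 linearly independent eigenvectors and therefore no eigenvector basis of ℝ³ at all, orthogonal or not.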

Nox_M wrote: So, if a matrix has 3 linearly independent eigenvectors, these vectors automatically form an orthogonal basis for ℝ³?

I believe three distinct real eigenvalues guarantee three linearly independent real eigenvectors.
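Distinct eigenvalues do give linearly independent eigenvectors, but independence is weaker than orthogonality. A small counterexample, sketched in pure Python with a hypothetical upper-triangular matrix M (not the exercise's A), whose eigenvectors were found by hand from null(M - λI):

```python
# Hypothetical upper-triangular matrix (NOT the exercise's A). Its eigenvalues
# are the diagonal entries 1, 2, 3, which are distinct.
M = [[1, 1, 0],
     [0, 2, 1],
     [0, 0, 3]]

# Eigenvectors found by hand from null(M - λI) for λ = 1, 2, 3.
eigenpairs = [(1, [1, 0, 0]), (2, [1, 1, 0]), (3, [1, 2, 2])]


def matvec(A, v):
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def det3(c1, c2, c3):
    """Determinant of the 3x3 matrix whose columns are c1, c2, c3."""
    (a, d, g), (b, e, h), (c, f, i) = c1, c2, c3
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


# Each vector really is an eigenvector: M v = λ v.
for lam, v in eigenpairs:
    assert matvec(M, v) == [lam * x for x in v]

vs = [v for _, v in eigenpairs]
print(det3(*vs))  # 2: nonzero, so the eigenvectors are linearly independent
print([dot(vs[i], vs[j]) for i, j in [(0, 1), (0, 2), (1, 2)]])  # [1, 1, 3]: not orthogonal
```

The three eigenvectors form a basis of ℝ³, but no pair of them is orthogonal, so three distinct real eigenvalues alone do not answer part (b).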

Nox_M wrote: I checked λ₂ = λ₃ = 2.5 and it only gives me 1 eigenvector. So a = -1.25 only gives me 2 linearly independent eigenvectors.

Good, then a = -1.25 is excluded at least!

I understand that three distinct real eigenvalues guarantee three linearly independent real eigenvectors. But what guarantees that the 3 eigenvectors are orthogonal?

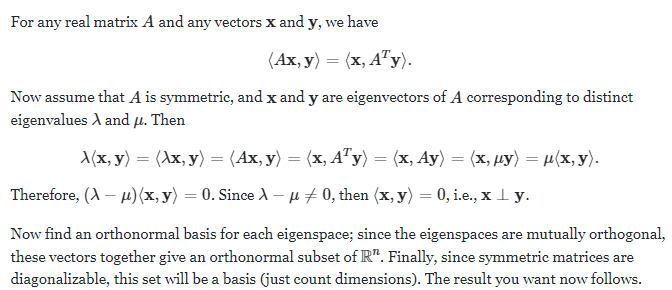

Nox_M wrote: I understand that three distinct real eigenvalues guarantee three linearly independent real eigenvectors. But what guarantees that the 3 eigenvectors are orthogonal?

I looked around a bit, and I can only find a proof that it holds when the matrix is symmetric. So, with the theorem I had in mind, I can only guarantee it for a = 1, where A is symmetric. Then you will have 3 orthogonal eigenvectors. :D

That makes sense. Can you link the theorem?

Nox_M wrote: That makes sense. Can you link the theorem?

The brackets are just scalar products here.
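The linked theorem itself is not reproduced in the thread. The standard argument, written with the same bracket notation for the scalar product, is sketched below, assuming A is a real symmetric matrix and v₁, v₂ are real eigenvectors with λ₁ ≠ λ₂.

```latex
Av_1 = \lambda_1 v_1, \qquad Av_2 = \lambda_2 v_2, \qquad A^{T} = A

\lambda_1 \langle v_1, v_2 \rangle
= \langle A v_1, v_2 \rangle
= \langle v_1, A^{T} v_2 \rangle
= \langle v_1, A v_2 \rangle
= \lambda_2 \langle v_1, v_2 \rangle

\Longrightarrow\ (\lambda_1 - \lambda_2)\,\langle v_1, v_2 \rangle = 0
\ \Longrightarrow\ \langle v_1, v_2 \rangle = 0 \quad (\text{since } \lambda_1 \neq \lambda_2)
```

Symmetry is used exactly once, in the step A^T = A; for a non-symmetric A the chain breaks there.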


Thank you! : )

Nox_M wrote: Thank you! : )

Best of luck! I will check in later to see if there are any advances. There are still infinitely many cases to investigate. :,( To get a feel for it, I would try a few cases with 3 real eigenvalues and see whether I get orthogonal eigenvectors or not. That way you know which direction you want to try to prove ;)
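One way to "try a few cases" without row reducing by hand is sketched below in pure Python. It relies on a 3×3 fact: for a simple eigenvalue λ, A - λI has rank 2, so its null space is spanned by the cross product of any two linearly independent rows. The functions `eigenvector` and `eigenvectors_orthogonal` are hypothetical helpers, and the matrices S and M are made-up test cases; the exercise's A would be plugged in the same way, together with its eigenvalues 2 and 2.5 ± √(a + 1.25) for a chosen a.

```python
import math


def cross(u, v):
    return [u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0]]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def eigenvector(A, lam, tol=1e-9):
    """Eigenvector of a 3x3 matrix A for a *simple* eigenvalue lam (assumed).

    A - lam*I then has rank 2, so its null space is spanned by the cross
    product of any two linearly independent rows.
    """
    B = [[A[i][j] - (lam if i == j else 0.0) for j in range(3)] for i in range(3)]
    for i, j in [(0, 1), (0, 2), (1, 2)]:
        v = cross(B[i], B[j])
        if max(abs(x) for x in v) > tol:
            return v
    raise ValueError("all rows of A - lam*I are parallel: eigenspace has dimension >= 2")


def eigenvectors_orthogonal(A, eigenvalues, tol=1e-9):
    """True if the eigenvectors for three distinct eigenvalues are pairwise orthogonal."""
    vs = [eigenvector(A, lam) for lam in eigenvalues]
    units = [[x / math.sqrt(dot(v, v)) for x in v] for v in vs]
    return all(abs(dot(units[i], units[j])) < tol
               for i in range(3) for j in range(i + 1, 3))


# Hypothetical test matrices, not taken from the exercise.
S = [[2, 1, 0], [1, 2, 0], [0, 0, 5]]  # symmetric, eigenvalues 1, 3, 5
M = [[1, 1, 0], [0, 2, 1], [0, 0, 3]]  # not symmetric, eigenvalues 1, 2, 3

print(eigenvectors_orthogonal(S, [1, 3, 5]))  # True
print(eigenvectors_orthogonal(M, [1, 2, 3]))  # False
```

The sketch assumes each value passed in really is a simple eigenvalue; if it is not an eigenvalue at all, the cross product is returned anyway and is not an eigenvector.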

That sounds great. I researched a little and found that a matrix is orthogonal only if the matrix is symmetric. So maybe that's the only case, but I am not 100% sure.

Addendum: 16 Apr 2022 22:08

I meant to say: 'a real matrix has an orthogonal basis of eigenvectors only if the original matrix is symmetric.'
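The "only if" direction can be checked in a couple of lines, assuming A is real and its eigenvectors v₁, v₂, v₃ are real and pairwise orthogonal: normalize them and use them as the columns of Q.

```latex
Q = \begin{bmatrix} \frac{v_1}{\lVert v_1 \rVert} & \frac{v_2}{\lVert v_2 \rVert} & \frac{v_3}{\lVert v_3 \rVert} \end{bmatrix},
\quad Q^{T} Q = I,
\quad AQ = QD,\ D = \operatorname{diag}(\lambda_1, \lambda_2, \lambda_3)

A = Q D Q^{T}
\ \Longrightarrow\
A^{T} = (Q D Q^{T})^{T} = Q D^{T} Q^{T} = Q D Q^{T} = A
```

The converse, that every real symmetric matrix has an orthogonal basis of eigenvectors (even with repeated eigenvalues), is the spectral theorem.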

Only one thread per question is allowed. This thread will be locked. Please continue in your first thread, if you still need help. /moderator